Knowledge Base

Can I use the Cleanplates of Cyclorama in another engine?

Because Reality uses an Image Based Keyer, an image taken from a specific camera with a specific lens, which might have a different Lens Center Shift value, will differ in each separate system. We recommend that you take the clean plates separately for each engine to make sure that you get the best result from each engine.

I have different lighting setups in my studio. Which lighting is the best to take clean plates for Cyclorama?

As Reality uses Image Based Keying, it is important to take the captures to be used as clean plates under your general lighting. We advise you to test and compare the results, and to take specific captures for clean plates if you observe a drastic change in the keying quality when you change the lighting setup in your physical studio. If you are only using talent lighting in close shots, this might not be needed. Please visit the following topic: What is Image Based Keying?

How to use FlyCam?

Fly Cam allows you to offset your Render Camera using your custom Actor transform. This approach can be useful, especially for fly-in and fly-out type camera animations.

Before you begin make sure that:

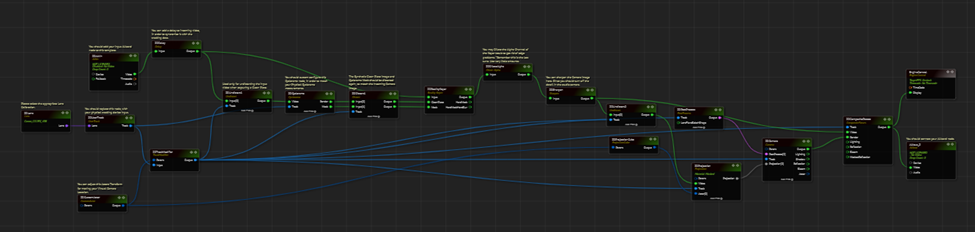

- Video I/O connections are done by adding AJA Card, AJA In and AJA Out nodes and selecting inputs and outputs accordingly.

- Cyclorama Size and Location property values are defined.

- Cyclorama projection is added by going to the Function tab of the Cyclorama node and clicking on the Add Projection button.

Hint: If you have a camera tracking system, replace ZDTLNT_CameraTrack node with a relevant tracking node.

To utilize it:

- Launch your Project via Reality Hub.

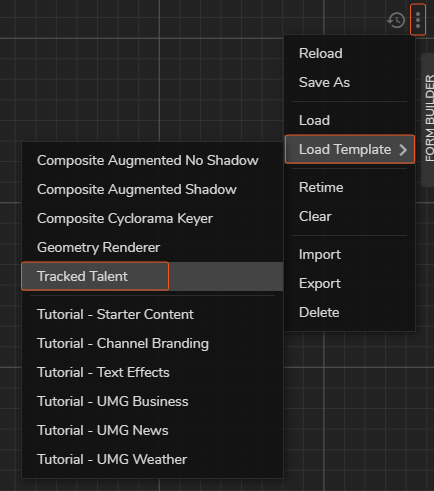

- Go to the Nodegraph Menu > Load Template > Tracked Talent

- Create a new Action via Actions Module.

- Select the ZDTLNT_FLY_Offset node, right-click on the Transform property circle, and select the Timeline.

- Go to the first frame in the Actions module, right-click on the ZDTLNT_FLY_Offset Transform value, and select Add Keyframe.

- Move the timeline Playhead to the desired position on the Time Ruler.

- Deselect the first keyframe.

- Change the Transform values of ZDTLNT_FLY_Offset, either by using a GamePad or by typing them manually.

- Right-click on the ZDTLNT_FLY_Offset Transform value and select Add Keyframe.

- Go to the Animation Interpolation section of the Actions module and select an interpolation type.

Hint: You can select an animation interpolation type for every individual keyframe.

- Click on the Action Play button to check your animation.
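To make the effect of the interpolation type more concrete, here is a minimal conceptual sketch of sampling an offset between two keyframes. It is not how Reality evaluates keyframes internally; the `Keyframe` class, the `linear` and `ease_in_out` curves, and the example offset values are all hypothetical and only illustrate the general idea.

```python
from dataclasses import dataclass


@dataclass
class Keyframe:
    time: float    # position on the time ruler, in seconds (assumed unit)
    offset: tuple  # hypothetical (x, y, z) offset of the fly camera


def linear(t: float) -> float:
    """Constant-speed interpolation."""
    return t


def ease_in_out(t: float) -> float:
    """Smoothstep curve: slow start, slow end."""
    return t * t * (3.0 - 2.0 * t)


def sample(first: Keyframe, second: Keyframe, time: float, curve=linear) -> tuple:
    """Return the offset at `time`, blended between two keyframes."""
    span = second.time - first.time
    if span <= 0:
        raise ValueError("second keyframe must come after the first")
    # Normalize the playhead position to 0..1 and clamp it to the keyframe range.
    t = min(1.0, max(0.0, (time - first.time) / span))
    weight = curve(t)
    return tuple(a + (b - a) * weight for a, b in zip(first.offset, second.offset))


if __name__ == "__main__":
    start = Keyframe(time=0.0, offset=(0, 0, 0))
    end = Keyframe(time=2.0, offset=(0, 0, 400))
    print(sample(start, end, 0.5, linear))
    print(sample(start, end, 0.5, ease_in_out))
```

With the same two keyframes, the eased curve is behind the linear one early in the move and catches up toward the end, which is why the choice of interpolation changes the feel of a fly-in or fly-out.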

What is the DilateRGBA node? How can I use it?

It is essentially a shrinking process applied to each of the RGBA channels separately. This node is used to overcome edge problems caused by spill and camera artifacts. The DILATE ALPHA node must be used.

We have finished the tracking device setup in our studio. How can we make sure that the tracking is well calibrated?

Please go to your Tracking node and make sure that the CCD of the camera is situated at the zero point of the physical set. After making sure that the camera sensor is at the zero point, check the tracking values while moving the camera within a 2-meter range in both the negative and positive directions. The pan, tilt and roll values should also be measured.

I am connected to my device via TeamViewer. Why doesn't Reality Editor launch?

You might have downloaded and installed Reality without any issues via a TeamViewer session. However, Reality Editor requires by design that the monitor is always on and never turned off. You might choose a KVM configuration as well.

What is GPUDirect and how to enable it in Reality Engine?

GPUDirect is an optimized pipeline for frame-based video I/O devices. The combined solution maximizes the performance of the GPUs. GPUDirect for Video is a technology that makes it faster to take advantage of the parallel processing power of the GPU for image processing by permitting industry-standard video I/O devices to communicate directly with NVIDIA professional Quadro GPUs at ultra-low latency. To enable GPUDirect in Reality Engine, please refer to the link here.

Does Reality Engine support hardware Fill and Key?

Yes. With the introduction of pixel format properties, it is possible to get Fill and Key through the AJA card’s physical ports. This makes it possible to send Fill and Key video channels over independent SDI ports from Reality, or to accept Fill and Key channels from other CG systems to use as DSK. See Fill and Key.

Does Reality Engine support Real-time Data driven graphics?

Yes. Reality Engine comes with a UMG based sample project with real-time data driven graphics for live football scores. JSON representation is used in the project to fetch real-time data updates.
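As a rough illustration of what a JSON-driven score update can look like, here is a minimal sketch that parses one update and turns it into a text line for a graphic. The payload layout and the field names (`home`, `away`, `name`, `score`) are hypothetical; they are not taken from the sample project.

```python
import json


def parse_score_update(payload: str) -> str:
    """Turn a JSON score update into a single display line.

    The structure of the payload is an assumption made for this example.
    """
    data = json.loads(payload)
    home = data["home"]
    away = data["away"]
    return f'{home["name"]} {home["score"]} - {away["score"]} {away["name"]}'


if __name__ == "__main__":
    example = '{"home": {"name": "Home FC", "score": 2}, "away": {"name": "Away FC", "score": 1}}'
    print(parse_score_update(example))
```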

Why can't I see the Level Sequencer option in the Create menu on the node graph?

A Level Sequencer created in another version must be reopened in the current version of Reality Editor and saved by clicking "Save" so that it is compiled for the current version.

See Level Sequencer

How can I mask AR reflections?

When using a second camera in AR pipelines to get a reflection of the AR graphic on the real-world floor, you may want to exclude some real areas from receiving reflections. To do so, create a 3D model of the places where you do not want to see reflections, connect it as a separate actor for the projection, and connect it to the showonly pin of the reflection camera as shown below.

Use the REFLECTION pin of COMPOSITE PASSES instead of the MASKEDREFLECTION pin, which was previously used with a second reflection camera in AR pipelines.

How to Create Hybrid Studio Rgraph?

Hint: Before you begin with this section, ensure your Cyclorama setup is ready. For more details, please visit the Cyclorama Setup section.

This section covers creating Hybrid Studio RGraph. Hybrid studio configuration is a 3D Mask topic. We define the mask type according to the color of the graphic. There are 4 distinct colors that we can define as mask areas.

- Black: Only graphic

- Cyan: Video output

- Red: Keying area

- Yellow: Spill suppression

To create a Hybrid Studio, please follow the instructions below:

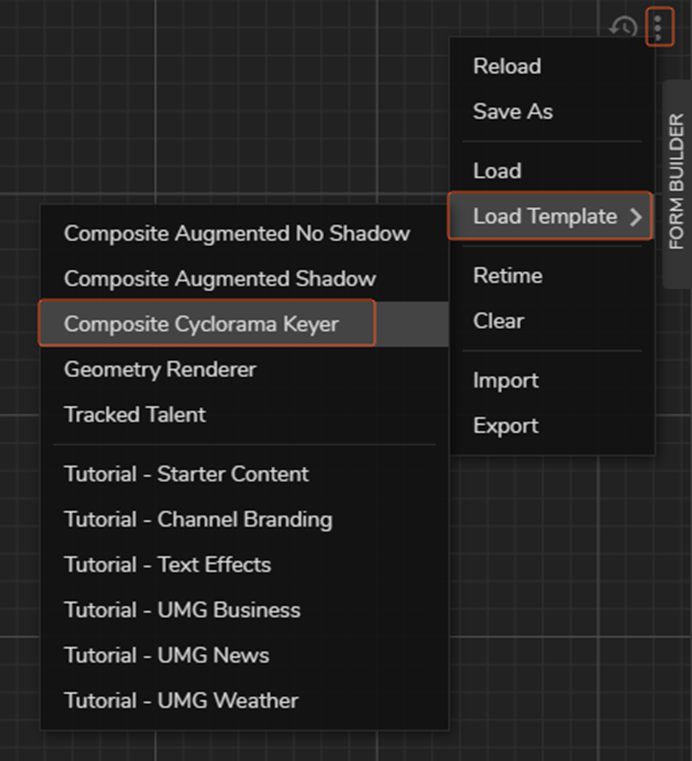

- Go to Nodegraph Menu > Load Template > Composite Cyclorama Keyer

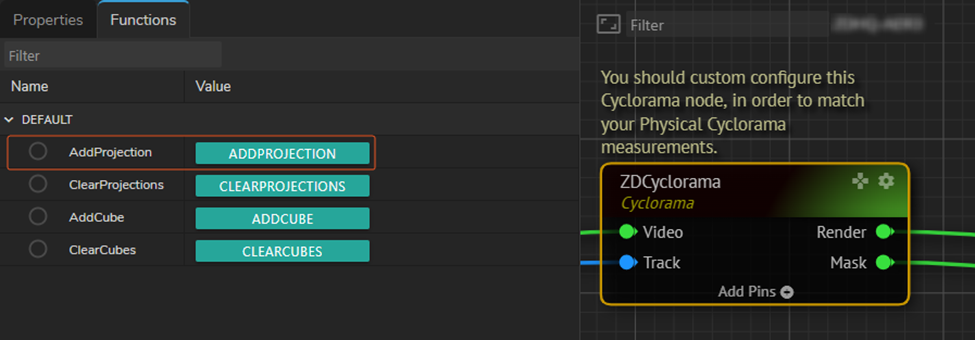

- Select the Cyclorama node, go to its Functions tab, and click on the Add Projection button.

- If you connect the Mask output pin of

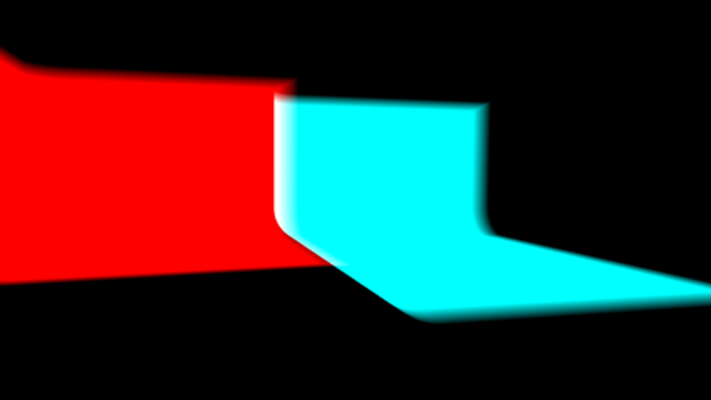

Cycloramanode to theChannelinput of theMixernode, you can see the mask output as in Figure 1 which means this area will be keyed, if the model or object gets into the green box. The black area will be full of graphics.

-Figure 1

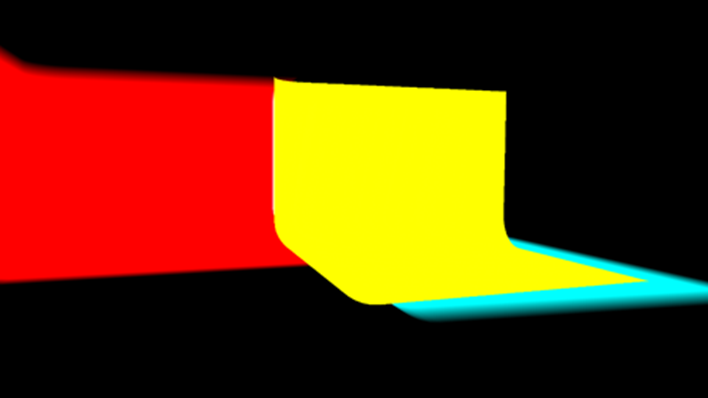

- We have defined the borders of our virtual environment. Now it is time to define the real area. To do that, create a new Cyclorama node and change its Mask Color to Cyan. Now that we have two different mask types in our configuration, we need to merge them with a Merge node. You will see the two different masks in the channel output when you merge them. Change the transform values of the Video mask Cyclorama and bring it near the first Cyclorama. You can follow various scenarios depending on your real studio configuration.

-Figure 2

- Most probably, you will see a green spill on the output. To eliminate this, duplicate the Video mask Cyclorama and change the mask color to Yellow. Just like the Cyan mask, you need to connect its Mask output pin to the Merge node.

-Figure 3

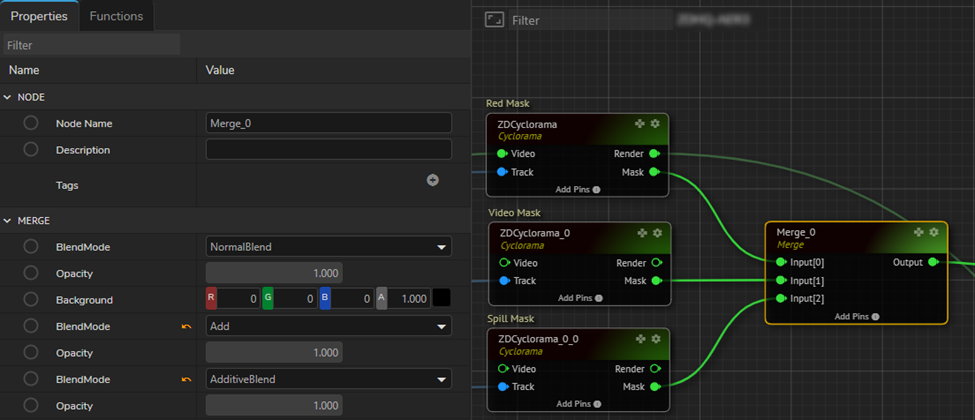

- Merge mode properties and mask connections must look like those in Figure 4.

-Figure 4

The hybrid studio is almost ready, but some adjustments are still needed before a demonstration or on-air show. There might be problems on the edges of the masks. There are a couple of ways to adjust the masks, depending on how accurate the tracking or lens calibration is. You can change the smoothness of the Cyclorama, for example to 0.001, and/or change CleanplateFOV. Alternatively, you can open the capture that you took earlier for the clean plate in mspaint, extend the green area, and load it again under the Cyclorama node.

How to make a basic teleportation?

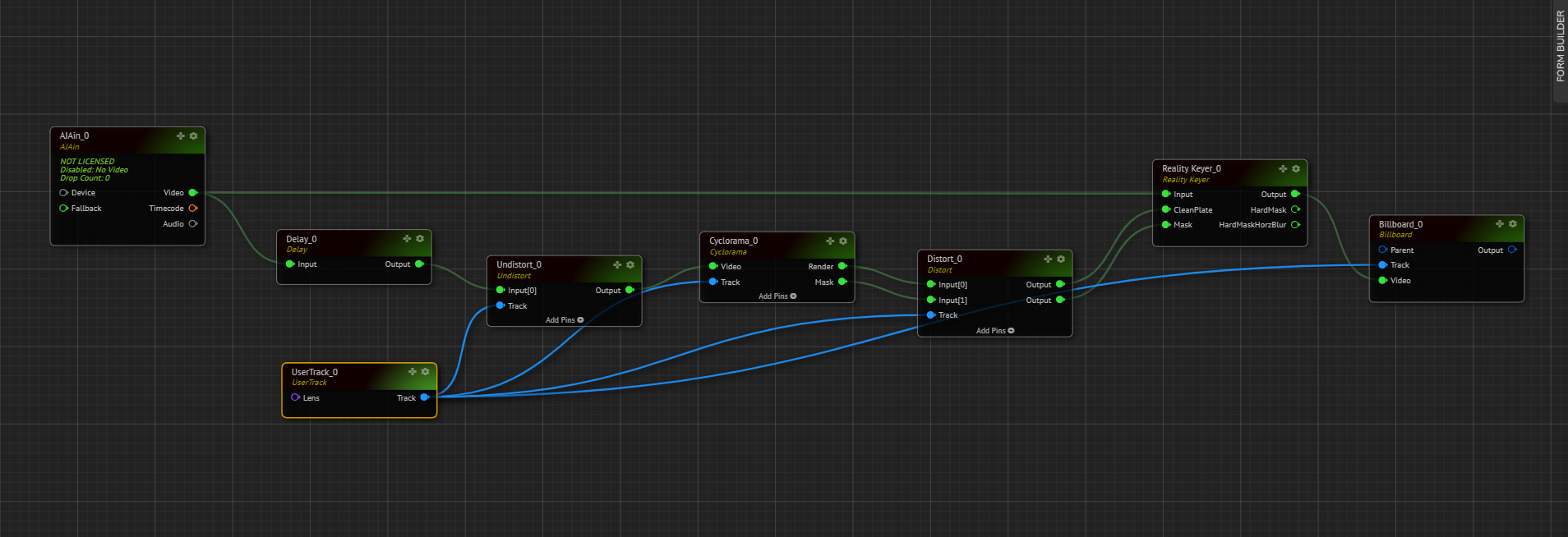

The easiest and most basic teleportation setup is possible using the Billboard node found under UE Actor Nodes. Follow the steps below to create a basic teleportation in your projects:

- Key the talent you want to teleport separately, and feed this keyed video to the Video pin of the Billboard node.

- Connect the Tracking pin of the Billboard node to your real tracking.

- Enable the “TalentFacing” property of your Billboard node so that the virtual billboard faces the camera no matter where your camera moves.

- Position this Billboard at the desired location in your project.

You have a few options to give this billboard a teleportation effect.

- You can enable and disable its "EnableRender" property for an instant appearance and disappearance of your talent.

- You can change the scale of the billboard for giving a stretching effect.

- You can change the transform values of the billboard node to give it a sliding effect.

- You can even combine these methods to get the best result that suits your project the most.

You can see a basic Rgraph example below:

How to do Multi-Format video IO with Reality?

In the AJACARD node, you can open the hidden tab under the Device category to reveal the UseMultiFormat property. Enabling this property allows users to do multi-format input and output.

UseMultiFormat property on AjaCard Node

*This feature was developed on Reality 2.9. Older versions might not support multi-format Video I/O

How to render background graphics only?

There are two ways to render background separately on Reality Setup.

1st Way:

It is possible to use a second virtual camera to render background graphics. Connecting the Projection cube to the hidden pin of the virtual camera render node's output will hide the projected video on the render, so only background graphics will be rendered.

This way, reflections will not be lost on the final composite output, but the second render will increase the load on the GPU.

Below, there is a screenshot for this process. On Channel 1, the ZDCamera node is used to project video and make the final composite. On Channel 2, the ZDCamera_0 node is used to render background graphics only.

Channel1: Final Composite Output

Channel2: Background Graphics

2nd Way:

If losing reflections and refractions is not important for the project, it is possible to use a Color node with 0 Alpha instead of video on the Projection node. With this method, keyed talents are not projected onto the 3D world and there will be no reflections or refractions of the keyed talents, but the GPU load will not increase.

Below, there is a screenshot for this process. A Color node with 0 Alpha is connected to the Video input pin of the Projection node. Now, the camera render can be used for both compositing and showing background graphics.

Channel1: Final Composite Output

Channel2: Background Graphics

How can I use the Timecode Event Trigger node and its functionality?

You can use a TimeCodeEventTrigger node to queue events and trigger them inside your blueprints at exact timecodes. The TimeCodeEventTrigger node is not intended to be used through the GUI; it is designed to be called through an API that accesses and changes the values of this node. Overriding the AJA Timecode FSM is not available, as this timecode is a counter of frames since the beginning of the year 2000.

How to add a timecode for executing a later event?

Go to the Functions tab of the TimeCodeEventTrigger node, type a TriggerTime, and click on the “Execute” button on AddTime, as shown in the attached screenshot. This adds the value to the queue shown in the Properties of the same node. The timecode trigger clears each trigger time as soon as it has played the trigger. This is by design: once a timecode has passed, it will never occur again, so it is deleted from the queue.
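The queue behavior described above can be sketched in a few lines. This is only a conceptual model, not the node's actual implementation or API: the `TriggerQueue` class, its method names, and the `frames_since_2000` helper are hypothetical, and representing a trigger time as a frame count at a constant frame rate in UTC is an assumption based on the description of the timecode as a frame counter since the year 2000.

```python
import heapq
from datetime import datetime, timezone

# Assumed reference point: the beginning of the year 2000, in UTC.
EPOCH = datetime(2000, 1, 1, tzinfo=timezone.utc)


def frames_since_2000(moment: datetime, fps: int) -> int:
    """Convert a moment in time to a frame count, assuming a constant frame rate."""
    return int((moment - EPOCH).total_seconds() * fps)


class TriggerQueue:
    """Hypothetical model of a queue of trigger times expressed as frame counts."""

    def __init__(self) -> None:
        self._times = []

    def add_time(self, trigger_time: int) -> None:
        """Queue a trigger time."""
        heapq.heappush(self._times, trigger_time)

    def pending(self) -> list:
        """Return the trigger times still waiting, earliest first."""
        return sorted(self._times)

    def tick(self, current_time: int) -> list:
        """Fire every trigger that is due and remove it from the queue."""
        fired = []
        while self._times and self._times[0] <= current_time:
            fired.append(heapq.heappop(self._times))
        return fired


if __name__ == "__main__":
    queue = TriggerQueue()
    queue.add_time(100)
    queue.add_time(50)
    print(queue.tick(60))   # the trigger at frame 50 is due and is removed
    print(queue.pending())  # only the trigger at frame 100 remains
```

Because a fired trigger is popped from the queue, calling `tick` again with the same time fires nothing, which mirrors why a passed timecode no longer appears in the node's Properties.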

How to run your project using command prompt on Editor mode?

You can use the command line to run a Reality Engine project.

Follow the steps below:

- Press the Windows button and type Command Prompt in the search box.

- Type the command below on the command line. You should modify the command according to your installation and project folder structure.

"C:\ProgramFiles\ZeroDensity\RealityEditor\4.26\Engine\Binaries\Win64\UE4Editor.exe" "R:\Reality\Projects\StarterProject\StarterProject.uproject" -game

- Press Enter

Then, it will launch Reality Engine with editor mode.
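If you prefer to start the project from a script instead of typing the command, the same command line can be assembled in Python. This is a minimal sketch: the two paths are copied from the example command above and must be adjusted to your installation and project folder structure, and the `build_command` and `launch` helper names are hypothetical.

```python
import subprocess
from pathlib import PureWindowsPath

# Paths copied from the example command; adjust them to your own setup.
EDITOR_EXE = PureWindowsPath(
    r"C:\ProgramFiles\ZeroDensity\RealityEditor\4.26\Engine\Binaries\Win64\UE4Editor.exe"
)
PROJECT_FILE = PureWindowsPath(
    r"R:\Reality\Projects\StarterProject\StarterProject.uproject"
)


def build_command(editor_exe, project_file) -> list:
    """Return the argument list: editor executable, project file, then -game."""
    return [str(editor_exe), str(project_file), "-game"]


def launch(editor_exe=EDITOR_EXE, project_file=PROJECT_FILE) -> subprocess.Popen:
    """Start the editor process without waiting for it to exit."""
    return subprocess.Popen(build_command(editor_exe, project_file))


if __name__ == "__main__":
    print(build_command(EDITOR_EXE, PROJECT_FILE))
    # Uncomment on a machine where the paths above exist:
    # launch()
```

Passing the arguments as a list lets Python handle the quoting, so paths that contain spaces do not need to be wrapped in quotes by hand.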

How to use a 3rd party plug-in in Reality Editor?

- Please make sure the plug-in and Reality Editor versions you are using are compatible (the same). You can follow these steps to find your version of Reality Editor.

- Please open the Reality Editor

- You can see “Reality Editor X.X.X. based on Unreal Editor Y.Y.Y”

Figure 1: Learning the current version of Reality (Unreal) Editor

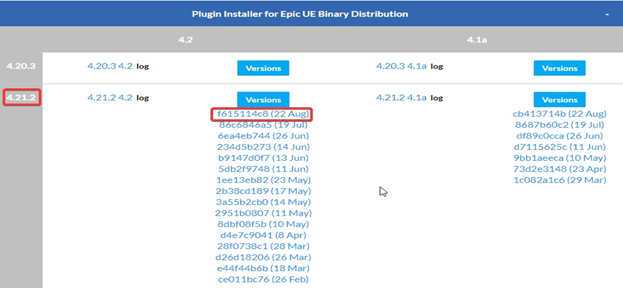

Figure 2: Choosing the correct version of plug-in

- Please download and install the 3rd party plug-in(s) to “C:\Program Files\Zero Density\Reality Editor”

- After the installation, you need to change the Build Id of the plug-in(s) manually.

- Please go to “C:\Program Files\Zero Density\Reality Editor\Engine\Plugins\Marketplace\Substance\Binaries\Win64”

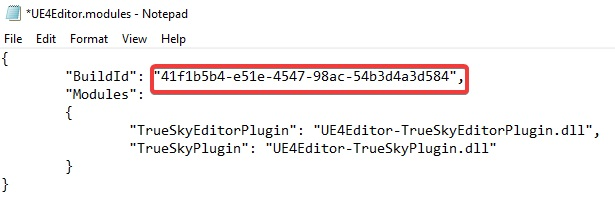

- Right-click on “UE4Editor.modules” and open it with Notepad

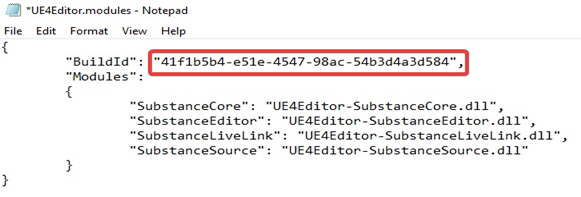

- Copy the “BuildId” value

Figure 3: Build ID

- Please go to “C:\Program Files\Zero Density\Reality Editor\Engine\Plugins” and locate your new plug-in(s)

- After locating the folder(s):

- Please go to “C:\Program Files\Zero Density\Reality Editor\Engine\Plugins\XXXXX\Binaries\Win64”

- Right-click on “UE4Editor.modules” and open it with Notepad

- Paste the copied value over “BuildId” and save the file

- If you have more than one plug-in, you need to repeat these steps for all of them

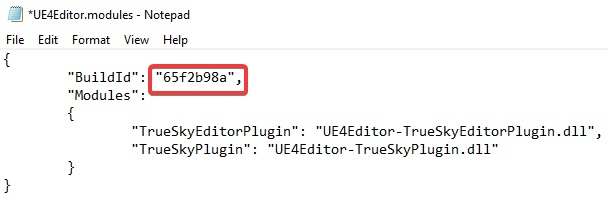

Figure 4: Before the change of Build ID

Figure 5: After the change of Build ID

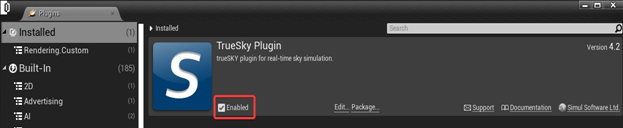

Figure 6: Enable the plug-in(s)
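When several plug-ins are installed, the manual Build ID steps above can be scripted. The following is a minimal sketch that assumes “UE4Editor.modules” is a plain UTF-8 JSON file with a top-level “BuildId” key; the helper names are hypothetical, and the “XXXXX” folder in the usage example is the placeholder from the steps above, not a real plug-in name. Back up the files before trying anything like this.

```python
import json
from pathlib import Path


def read_build_id(modules_file: Path) -> str:
    """Read the BuildId value from a UE4Editor.modules file (assumed to be JSON)."""
    return json.loads(modules_file.read_text(encoding="utf-8"))["BuildId"]


def apply_build_id(modules_file: Path, build_id: str) -> None:
    """Overwrite only the BuildId value and keep every other entry unchanged."""
    data = json.loads(modules_file.read_text(encoding="utf-8"))
    data["BuildId"] = build_id
    modules_file.write_text(json.dumps(data, indent=4), encoding="utf-8")


def sync_build_ids(reference_file: Path, plugin_files: list) -> str:
    """Copy the BuildId of the reference file into each plug-in file."""
    build_id = read_build_id(reference_file)
    for modules_file in plugin_files:
        apply_build_id(modules_file, build_id)
    return build_id


if __name__ == "__main__":
    plugins = Path(r"C:\Program Files\Zero Density\Reality Editor\Engine\Plugins")
    reference = plugins / "Marketplace" / "Substance" / "Binaries" / "Win64" / "UE4Editor.modules"
    # "XXXXX" is a placeholder; replace it with the folder of your new plug-in.
    targets = [plugins / "XXXXX" / "Binaries" / "Win64" / "UE4Editor.modules"]
    if reference.exists() and all(t.exists() for t in targets):
        print(sync_build_ids(reference, targets))
```

Writing to “C:\Program Files” usually requires running the script with administrator rights, just as saving the file from Notepad does.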