Raw Input

Raw data is the sensor data captured before any image processing is applied. Because of the color filter layout on the sensor, it is often called a Bayer pattern or Bayer image. The benefit of raw data in Reality is that the uncompressed data captured by the camera is de-Bayered inside Reality itself, which produces high-quality video with a cleaner signal and therefore a better-quality key.

In a single-sensor camera, color is produced by filtering each photosite (or pixel) so that it records either a red, a green, or a blue value. The most commonly used filter arrangement is the Bayer pattern. Because each pixel contains only one color value, raw data cannot be viewed on a monitor in any meaningful way. In other words, raw is not yet video.
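To make the "one color value per pixel" idea concrete, here is a minimal Python sketch of how a Bayer mosaic samples a full-color scene. It assumes an RGGB layout; the document does not say which Bayer order the VariCam sensor uses (real sensors may use GRBG, GBRG or BGGR), and the function names are illustrative only.

```python
# Illustrative sketch only: assumes an RGGB Bayer layout, which may not match
# the actual sensor. Function names are hypothetical.

def bayer_color(row: int, col: int) -> str:
    """Return the single color channel recorded at (row, col) in an RGGB pattern."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb_image):
    """Simulate a single-sensor capture by keeping one channel value per pixel.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples.
    The result holds one number per pixel, which is why raw is not viewable as video.
    """
    channel_index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[channel_index[bayer_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


if __name__ == "__main__":
    # Print the filter layout of a 2x4 patch of the sensor.
    for r in range(2):
        print(" ".join(bayer_color(r, c) for c in range(4)))
    # R G R G
    # G B G B
```

Half of the photosites are green in this layout, which is the usual Bayer trade-off because the eye is most sensitive to green.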

Raw has to be converted to video for viewing and use. This is usually done through a de-Bayer process, which determines both the color and the brightness of each finished pixel in the image. The upside of raw is that none of the usual video processing has been baked in: the sensor outputs exactly what it sees, with no white balance, ISO, or other color adjustments applied. This, together with a high bit rate, leaves a great deal of room for adjustment in Reality.
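As a rough illustration of de-Bayering, the Python sketch below uses the simplest possible method, "superpixel" de-Bayering, which collapses each 2x2 RGGB block into one RGB pixel at half resolution. This is not the algorithm Reality uses (the document does not describe it); it only shows how color is reconstructed from single-channel samples. The white balance step illustrates why leaving adjustments unbaked matters: per-channel gains can still be chosen after capture. The RGGB order, gains and function names are assumptions.

```python
# Illustrative sketch only: a superpixel de-Bayer on an assumed RGGB mosaic,
# not Reality's de-Bayer algorithm. Function names are hypothetical.

def superpixel_debayer(raw):
    """Convert an RGGB mosaic into RGB by collapsing each 2x2 block into one pixel.

    raw: list of rows of numbers with even width and height.
    Returns a half-resolution list of rows of (r, g, b) tuples.
    """
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("superpixel de-Bayer needs even dimensions")
    out = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            r = raw[y][x]                             # top-left: red
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2   # average the two greens
            b = raw[y + 1][x + 1]                     # bottom-right: blue
            row.append((r, g, b))
        out.append(row)
    return out


def apply_white_balance(rgb_rows, gains):
    """Multiply each channel by a gain; possible because raw has no white balance baked in."""
    gain_r, gain_g, gain_b = gains
    return [[(r * gain_r, g * gain_g, b * gain_b) for (r, g, b) in row] for row in rgb_rows]
```

Production de-Bayer algorithms interpolate the missing channels at full resolution instead of halving it, but the principle of rebuilding color from neighboring photosites is the same.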

Working with Raw Video Input in Reality

Before you begin, note that not all cameras provide raw data. Only specific cameras on the market deliver raw output, one of which is the Panasonic VariCam. Reality now supports VariCam raw data in live video production, which improves the quality of the key.

To set up raw input in Reality, follow the steps below:

Creating Basic Raw Input and Output Pipeline

Launch the project in which you intend to use raw input.

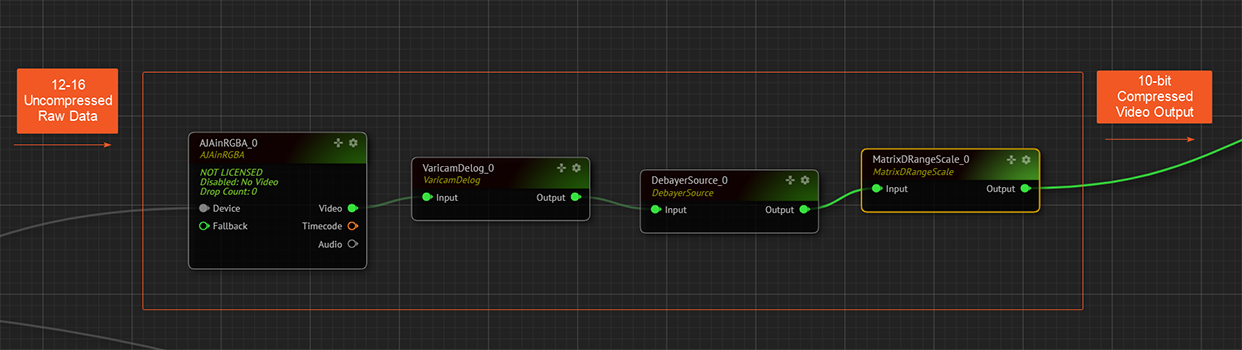

In the Setup tab, add the raw data processing nodes listed below to the node graph, in the order given (1, 2 and 3):

1. VaricamDelog node. Go to Experimental > Varicam.
2. DebayerSource node. Go to Experimental.
3. Matrix-Drangescale node. Go to Experimental > Varicam.

These three nodes perform all of the image processing and de-Bayer (demosaic) the raw input data.
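The document does not say what the VaricamDelog node computes. Judging only by its name, it likely converts log-encoded camera values back to linear light. The Python sketch below shows what such a step looks like if the input follows Panasonic's published V-Log curve; that is an assumption, and the actual node may use a different curve, bit depth or normalization. The function names are hypothetical.

```python
import math

# Constants from Panasonic's published V-Log curve.
# ASSUMPTION: VaricamDelog is taken here to invert this curve; the real node may differ.
V_LOG_B = 0.00873
V_LOG_C = 0.241514
V_LOG_D = 0.598206
V_LOG_CUT2 = 0.181  # code value below which the curve is a straight line


def vlog_to_linear(v: float) -> float:
    """Convert a normalized V-Log code value (0.0 to 1.0) to linear scene reflectance."""
    if v < V_LOG_CUT2:
        return (v - 0.125) / 5.6
    return 10 ** ((v - V_LOG_D) / V_LOG_C) - V_LOG_B


def linear_to_vlog(x: float) -> float:
    """Inverse of vlog_to_linear, useful for checking the round trip."""
    if x < 0.01:
        return 5.6 * x + 0.125
    return V_LOG_C * math.log10(x + V_LOG_B) + V_LOG_D
```

With these constants, 18% grey (linear 0.18) encodes to a V-Log value of about 0.423, and decoding that value returns 0.18.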

For video input, add an AJACard node, then add an AJAInRGB node. Connect the AJACard node's Device output pin to the AJAInRGB node's Device input pin.

For raw input, a new AJAInRGB node must be used; you can find it under Experimental > Media I/O. This node is where the raw data from the camera enters the pipeline.

Now add the three raw processing nodes (in the order listed above), which perform all of the image processing and de-Bayer the raw input data.

Connect the AJAInRGB Device pin to the VaricamDelog Input pin.

Then connect the VaricamDelog Output pin to the DebayerSource Input pin.

Then connect the DebayerSource Output pin to the Matrix-Drangescale Input pin. Finally, connect the Matrix-Drangescale Output pin to the Mixer node, or to any other node in the compositing pipeline, depending on what you want to composite.
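Because the order of the three raw nodes matters, it can help to think of the wiring as a chain of connections. The Python sketch below models the connections described above as a plain edge list and checks that the raw stages appear in the required order. It is a hypothetical model for reasoning about the graph, not Reality's API. The node and pin names come from the steps above, except the Mixer input pin name, which is a placeholder.

```python
# Hypothetical model of the node graph; this is not Reality's API.
# Each connection is (source node, source pin, destination node, destination pin).
RAW_CHAIN = [
    ("AJAInRGB", "Device", "VaricamDelog", "Input"),
    ("VaricamDelog", "Output", "DebayerSource", "Input"),
    ("DebayerSource", "Output", "Matrix-Drangescale", "Input"),
    ("Matrix-Drangescale", "Output", "Mixer", "Input"),  # Mixer pin name is a placeholder
]

REQUIRED_ORDER = ["AJAInRGB", "VaricamDelog", "DebayerSource", "Matrix-Drangescale"]


def follow_chain(connections, start):
    """Walk the connections from `start` and return node names in signal order."""
    next_node = {src: dst for src, _, dst, _ in connections}
    order = [start]
    while order[-1] in next_node:
        nxt = next_node[order[-1]]
        if nxt in order:
            raise ValueError(f"cycle detected at {nxt}")
        order.append(nxt)
    return order


def raw_stages_in_order(connections) -> bool:
    """True if the raw processing nodes follow the input in the required order."""
    return follow_chain(connections, "AJAInRGB")[: len(REQUIRED_ORDER)] == REQUIRED_ORDER
```

Swapping, for example, VaricamDelog and DebayerSource in the edge list makes `raw_stages_in_order` return `False`, mirroring the requirement to add and connect the nodes in the listed order.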

The Matrix-Drangescale node outputs the processed, de-Bayered image, which can be viewed on any SDI or HDMI monitor just like any other non-raw video source.
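The document does not describe the Matrix-Drangescale node's internals. Its name suggests a color matrix followed by a dynamic-range scale that brings the signal into a range a video monitor expects. The Python sketch below illustrates that assumed pair of operations: a 3x3 matrix applied to an RGB value (the identity matrix is used as a placeholder, since no coefficients are given) and a scale from normalized 0.0 to 1.0 into 10-bit narrow ("legal") video range, 64 to 940. Gamma encoding and other display transforms are omitted, and the function names are hypothetical.

```python
# Illustrative sketch only: assumes "Matrix" means a 3x3 color matrix and
# "Drangescale" means scaling to 10-bit narrow range. Names are hypothetical.

IDENTITY = ((1.0, 0.0, 0.0),
            (0.0, 1.0, 0.0),
            (0.0, 0.0, 1.0))  # placeholder; real coefficients are not given


def apply_matrix(rgb, matrix):
    """Multiply an (r, g, b) tuple by a 3x3 matrix given as three rows."""
    r, g, b = rgb
    return tuple(m[0] * r + m[1] * g + m[2] * b for m in matrix)


def to_10bit_legal(value: float) -> int:
    """Clamp a normalized value to 0.0-1.0 and map it to 10-bit narrow range (64-940)."""
    clamped = min(max(value, 0.0), 1.0)
    return round(64 + clamped * (940 - 64))


def matrix_and_scale(rgb, matrix=IDENTITY):
    """Apply the color matrix, then scale each channel to 10-bit narrow range."""
    return tuple(to_10bit_legal(c) for c in apply_matrix(rgb, matrix))
```

For example, `matrix_and_scale((0.0, 0.5, 1.0))` maps black, mid and full values to 64, 502 and 940.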

The image below shows the raw data processing nodes from input to output. In other words, you can treat the video coming out of the Matrix-Drangescale Output pin as if it came directly from an AJA input, and connect that output pin to the input pins of other nodes in the same way.

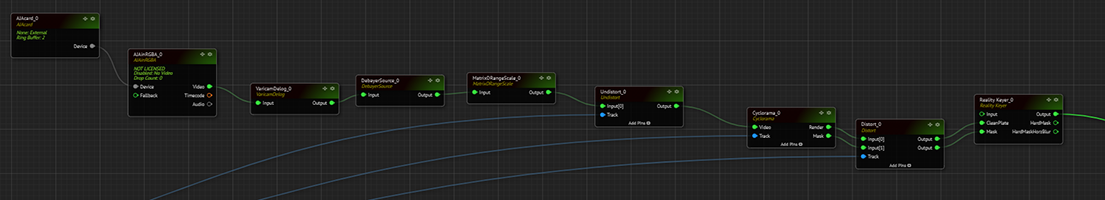

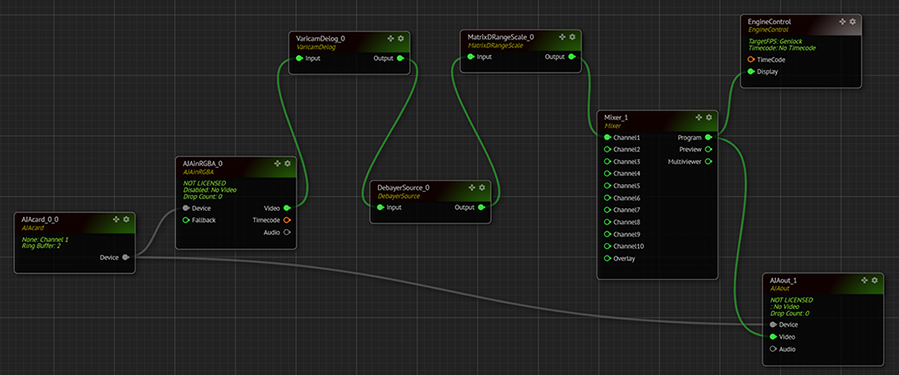

The image below shows the raw data processing nodes from input to output together with other nodes; in this example, the output is composited with a Cyclorama for keying.